True though that might be, the app was both an instant success in terms of the number of interested parties and a categorical failure due to the widespread backlash against such a technology.

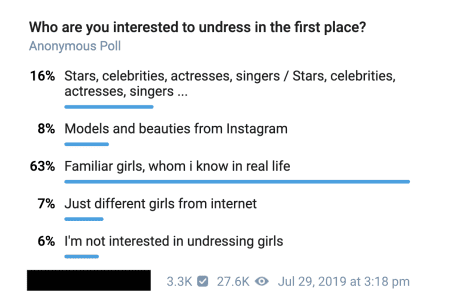

Early in 2019, a new app called DeepNude hit the market, promising users the power of X-ray vision. The app, available for free, was simple to use: you just needed to upload a photograph of a clothed woman to start the process. The software detected the clothing and estimated the size, shape and position of all body parts. From there, a sophisticated algorithm using generative adversarial networks removed the subject's clothes, leaving you with a fake nude photo. Users could then pay $50 to have the app's watermarks removed, so not only did you have a fake nude photo, but you had one that couldn't be identified as fake at all.

Articles in The Washington Post, Vice and other media showed how the app could be used to take a photo of a clothed woman and transform it into a nude image, sparking outrage and renewed debate over nonconsensual pornography. The app's creator then took it off the market, saying: "Despite the safety measures adopted (watermarks), if 500,000 people use it, the probability that people will misuse it is too high. We don't want to make money this way."

"This is a horrifically destructive invention and we hope to see you soon suffer consequences for your actions," tweeted the Cyber Civil Rights Initiative (CCRI), a group that seeks protection against nonconsensual and "revenge" porn. Mary Anne Franks, a law professor and president of the CCRI, tweeted later, "It's good that it's been shut down, but this reasoning makes no sense. The app's INTENDED USE was to indulge the predatory and grotesque sexual fantasies of pathetic men."

DeepNude offered a free version of the application as well as a paid version, and was the latest in a trend of "deepfake" technology that can be used to deceive or manipulate.

Although the app was shut down, critics expressed concern that some versions of the software remained available and would be abused. "The #Deepnude app is out there now and will be used, despite the creator taking it off the market. If only there were a way to disable all the versions out there," CCRI tweeted.
